I’m reading William MacAskill’s “Doing Good Better,” and it’s motivated me to automate monthly donations to the most effective charities I can find. I’d set up automated donations in the past, but when I moved to Maui, moving costs, a job change, and a higher cost of living forced me to suspend them. MacAskill’s book about Effective Altruism has served as the reminder we all occasionally need, and my hope is that this post will serve as that reminder for others.

MacAskill writes of “The 100x Multiplier,” the idea that, as citizens of the most economically developed societies, we can do 100 times more good with our resources for the poorest people on earth than we can for ourselves. In other words, the benefit one of the world’s poorest people would get from $1 is about the same as the benefit I would get from $100. This is a very powerful idea, especially considering how insanely easy it is to donate to some of the most effective charities in the world, regularly and automatically.

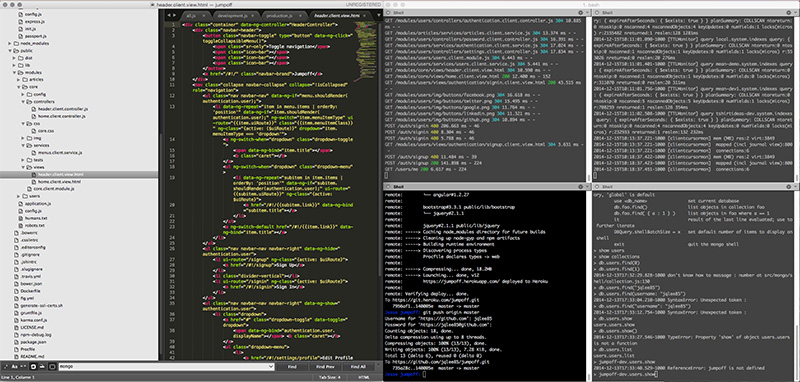

GiveWell.org does thorough research on which charities are most effective at doing good. I just went to this link: https://secure.givewell.org/donate-online, and set up a recurring monthly donation to Deworm The World and GiveDirectly. I timed it on my phone: it took 1:36.29 (1 min 36 sec). That includes looking around for my wallet and my autofill settings entering the wrong address.

The amount each of us can give varies, but almost anyone reading this blog can afford to set up something that comes out of their bank account automatically, and they likely won’t even notice it. It’s easy to get caught up in our day-to-day lives and forget about the extraordinary position we’re in to help those who were born into much poorer economic circumstances. This is why it’s so important to automate these donation decisions: automation makes giving the default, and if our economic circumstances change, we can dial back donations as needed. But we should always keep in mind that what seems like a little to us can make a huge difference for others.